A data-tier application (DAC) is an entity that contains all of the database and instance objects used by an application. It provides a single unit for authoring, deploying, and managing the data-tier objects, instead of having to manage them separately through scripts. A DAC allows tighter integration of data-tier development and gives administrators an application-level view of resource usage in their systems.

Data-tier applications can be developed in Visual Studio and then deployed to an instance of SQL Server using the Data Tier Deployment Wizard.

You can also extract a data-tier application from an existing database for use in Visual Studio, or easily deploy it to a different instance of SQL Server, including a SQL Azure instance.

Let's walk through a step-by-step example.

A) Extracting a Data Tier Application

- Suppose the first server is named VIRENDRA1_SQL2012 and the database is named VIRENDRATEST

- In SQL Server Management Studio Object Explorer, right-click VIRENDRATEST on VIRENDRA1_SQL2012

- Click Tasks | Extract Data-Tier Application

- When the Data-Tier Application Wizard displays, click Next

- In the Set Properties window, specify C:\VIRENDRATEST.dacpac as the filename under Save to DAC package file

- If prompted that the file already exists, replace the existing file

- Click Next

- On the Validation and Summary Page, click Next

- When the extraction completes, click Finish

You have now successfully extracted a database as a Data-Tier Application package (.dacpac).
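If you would rather script the extraction than click through the wizard, the same operation can be driven from the SqlPackage command-line tool. The following is only a minimal sketch: the helper name `build_extract_command` is hypothetical, it assumes `SqlPackage.exe` is installed and on the PATH, and it assumes Windows authentication against the source server. It only builds the argument list, so you can inspect the command before running it.

```python
def build_extract_command(server, database, dacpac_path, sqlpackage="SqlPackage.exe"):
    """Build the SqlPackage argument list that extracts a database to a .dacpac.

    `sqlpackage` is an assumed executable name; adjust it to the full path
    of SqlPackage on your machine if it is not on the PATH.
    """
    return [
        sqlpackage,
        "/Action:Extract",                   # extract a DAC package from a live database
        f"/SourceServerName:{server}",       # instance that hosts the database
        f"/SourceDatabaseName:{database}",   # database to extract
        f"/TargetFile:{dacpac_path}",        # where the .dacpac is written
    ]


if __name__ == "__main__":
    cmd = build_extract_command(
        "VIRENDRA1_SQL2012", "VIRENDRATEST", r"C:\VIRENDRATEST.dacpac"
    )
    print(" ".join(cmd))
    # To actually run the extraction:
    #   import subprocess
    #   subprocess.run(cmd, check=True)
```

Returning a list rather than a single string keeps the arguments safe to pass to `subprocess.run` without shell quoting issues.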

B) Deploying a Data Tier Application

- Suppose the second server is named VIRENDRA2_SQL2012, and the database to deploy is named VIRENDRATEST

- In SQL Server Management Studio Object Explorer, Right-Click VIRENDRA2_SQL2012

- Select Deploy Data Tier Application

- As the Introduction Page displays, click Next

- In the Select Package window, browse to C:\VIRENDRATEST.dacpac

- Select the Data-Tier Application package you previously extracted

- Click Next

- As Package Validation completes, click Next on the Update Configuration Page

- Click Next on the Summary Page

- As Deployment completes, click Finish

You have now successfully deployed a Data Tier Application to a SQL Server Instance running on a different server.
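The deployment can also be scripted with the SqlPackage command-line tool instead of the wizard. Again, this is a hedged sketch and not the only way to do it: the helper name `build_publish_command` is hypothetical, `SqlPackage.exe` is assumed to be on the PATH, and Windows authentication against the target server is assumed. The function only assembles the arguments for the Publish action.

```python
def build_publish_command(dacpac_path, server, database, sqlpackage="SqlPackage.exe"):
    """Build the SqlPackage argument list that publishes a .dacpac to a server.

    `sqlpackage` is an assumed executable name; adjust it to the full path
    of SqlPackage on your machine if it is not on the PATH.
    """
    return [
        sqlpackage,
        "/Action:Publish",                   # deploy the package to a target database
        f"/SourceFile:{dacpac_path}",        # the .dacpac to deploy
        f"/TargetServerName:{server}",       # instance to deploy to
        f"/TargetDatabaseName:{database}",   # database name on the target instance
    ]


if __name__ == "__main__":
    cmd = build_publish_command(
        r"C:\VIRENDRATEST.dacpac", "VIRENDRA2_SQL2012", "VIRENDRATEST"
    )
    print(" ".join(cmd))
    # To actually run the deployment:
    #   import subprocess
    #   subprocess.run(cmd, check=True)
```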

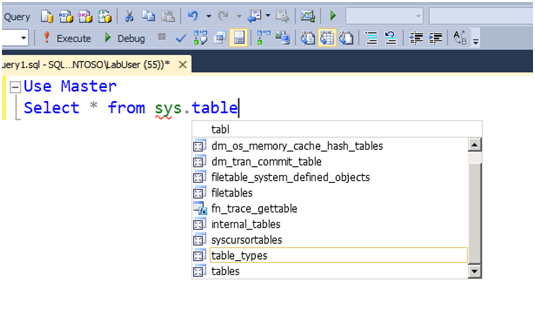

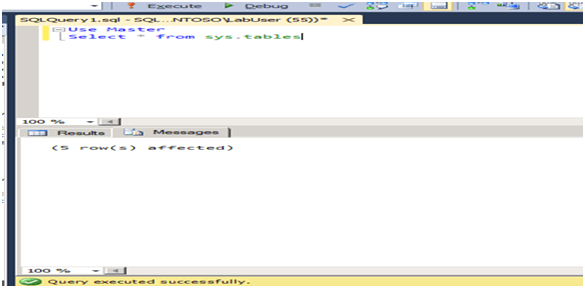

3) Code snippets are an enhancement to the Template feature that was introduced in SQL Server Management Studio. You can also create and insert your own code snippets, or import them from a pre-created snippet library.
