Now days, as it’s very common and normal trends, The DB size as 100~150…. n GBs are being considered the normal, and even many databases are crossing the terabyte range. In general practice commonly a DBA or sometime Developer starts backups in one process that take a long time and Database is continuing to grow, this back time will increase accordingly, to reduce the backup time, it’s always suggested take backup in Disk instead of tape in off hours. It’s my personal opinion always take backup to San Disk and then archive it to Tape. Disk capacity is matter because it’s directly proportional to costing/expense and to reduce backup size we can use compression. As we know taking a Full backup is very intensive I/O process as every data page in the database must be read and then written to another file. It’s my personal observation, having multiple physical disks, we can achieve higher I/O rates and complete the backup process more quickly with in addition to writing to multiple files, it is also helpful to write to different physical disks to alleviate the I/O bottleneck.

Here are steps, How to take Backup on multiple disks / multiple files using SSMS.

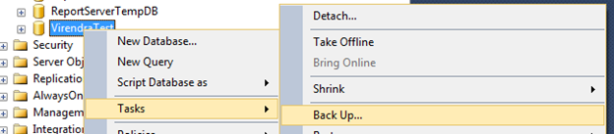

Step 1) Right Click on Database à Task à Back Up

It will shows screen as

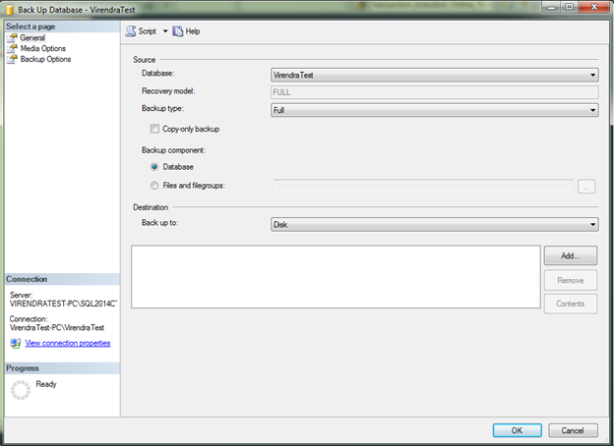

Step 2) Click on Add from Destination section and set there multiple physical disk with multiple files by clicking on ADD button again and again.

And finally suppose we have set 2 disk with 2 files it will show like as below

Step 3) Click on OK, Backup will start with 2 physical disks.

The sample script for this process will be as below,

BACKUP DATABASE [VirendraTest] TO DISK = N’C:\VirendraTestOnCdrive.bak’,

DISK = N’E:\VirendraTestOnEdrive.bak’ WITH NOFORMAT, NOINIT,

NAME = N’VirendraTest-Full Database Backup’, SKIP, NOREWIND, NOUNLOAD, STATS = 1

GO

Please suggest, your finding.

excellent..!! Then, how to restore these two backup files into one database.

LikeLike

Same way… adding these files.

LikeLike

Restore DATABASE [VirendraTest] From DISK = N’C:\VirendraTestOnCdrive.bak’,

DISK = N’E:\VirendraTestOnEdrive.bak’ with recovery

LikeLiked by 1 person

I don’t necessarily agree with the idea of backing up to the same rack or even the same room as the database. If a fire breaks out in the room, you can easily lose everything especially. Yeah… you might have a DR site but having an ace in the hole in the form of nearby Point-in-Time backups sure provides the nice warm fuzzies. Yes, you could backup to the main SAN and then copy them somewhere but what happens if something goes haywire in the meantime? It might be “good enough” for others but I see no need in taking the chance.

And, if you do it right, alternate storage is no more insecure, difficult, complex, or unscalable than the main SAN is. “It Depends” just like anything else.

I do agree (like I said in my first post) that trying to build separate areas in the SAN for separate files is mostly a losing proposition which also means that multiple file backups may or may not be a losing proposition depending on what the disk controller does. If you have the same read/write heads in play for two different files, it’s a losing proposition and vice versa.

As for partitioning, the only reason why anyone would be interested in improving the performance of backups is if the performance of the backups becomes a problem. In today’s day and age of nasty-fast hard-drives and compressed backups, there can only be one real reason for slow backups (provided the pipe to the drives is ok) and that’s a ton of data. While I agree that setting up partitioning is no cake walk (and if you think it is, you’ve probably done it wrong), if you’re worried about backup performance then you should also worry about other things having to do with a ton of data like maintenance performance, online piece-meal restores, being able to backup all the current data in just a couple of minutes, etc, etc. That also means that you can’t just be an average DBA anymore. You need to press the envelope a little and learn how to do the advanced stuff like partitioning, etc.

So, some things I agree on, some I disagree on, but it’s all food for thought. That’s half the purpose of any decent article … get people talking about the problems and see what they think.

LikeLike

Hi Jeff, Got your comment after long time. Agree with you.

LikeLike

logically is it good,,,,,,,,,,,,,,?????

LikeLike

Good idea and good to-the-point article. Thank you for taking the time to write it.

I’d like to add a couple of words of warning and a different, perhaps even more effective method…

Words of warning…

If you backup to the same SAN as where your MDF/LDF/NDF files are located, you are taking a great risk because if the SAN goes down, your data is lost and so are your backups. I’d recommend backing up to slower disks if that was the only other choice than backing up to the same SAN where the SQL Server database files live.

Backing up to multiple physical disks can be a great help performance wise BUT, if your backing up to a SAN, what may appear to be separate physical disks to you may not actually be separate disks. You’d have to work very closely with your SAN admin to make such a thing happen and, considering the size of individual disks nowadays, I wouldn’t expect much cooperation there.

======================================================

A Different and Even More Effective Method…

Shifting gears, let me ask you a question. When you do the laundry, do you wash ALL the clothes in the house or just the dirty ones?

Databases are very similar. In most cases, at least 50% of the data (or as high as 99%+ in a lot of cases) is totally static either due to its use (lookup/reference tables) or mostly static (temporal audit tables including transaction tables, as well) because of its temporal nature.

For example, one of my databases recently climbed over the 340GB mark. Oddly enough, it’s for a telephone system where I absolutely have to keep the call recordings in the database itself. Equally as odd, all but 1GB of that is tied up in the single CallRecordings table. Because I do “long haul” backups to slower NAS disks instead to the SAN, it was taking 8 to 10 hours to do the backups. The table has more than 5 years worth of data in it and the only thing I really needed to backup every night is the current month of data.

By partitioning the table by month (didn’t want to do it by week but could have) with 1 filegroup/file for each month, I was able to set 63 months of data to READ_ONLY and only backup the current month (after backing up the R/O partitions one final time). Basically, I’m now only backing up 0 to 1/64th of the database each night. My “full” backups now average somewhere between 6 and 9 minutes for a 340GB database and I’m still using SQL Server 2005 where there’s no compression!!! My Tape Backup Administrator loves me because it also means there’s a whole lot less time and tape the he has to lug around.

And for all you folks out there with “only” the Standard Edition that think you can’t also do such a thing, I did the same thing for a side job that I took for a customer that had a 200GB table that was not only blowing up the backups but was killing their total disk space because of how big the log file would grow when they had to reindex the table. I did it with “Partitioned Views”, which are actually easier (so far as I’m concerned) to maintain than “Partitioned Tables” in the Enterprise Edition.

There’s also a wealth of other benefits (index maintenance being one of them) to either form of partitioning but it absolutely rocks for reducing backup times on temporally based “WORM” tables like audit table, invoice detail tables, etc, etc.

LikeLiked by 1 person

Hi Jeff,

Thanks for your valuable advise.

Love to invite you on board as author. Please think and let me know.

I always respect your suggestions and try to implement in day to day activities.

Thanks a lot and always wants your valuable help.

Thanking You

Virendra

LikeLike

Some points to consider

1. having multiple backup files for the sake of higher IO throughput adds complexity and risk without always getting the IO throughput gains.

2. Most corporate storage have multiple SAN deployments optimized for different workloads. Backups should go to IT managed SANs whether it’s the same rack as the prod DB or not, is immaterial. Protecting the SAN and meeting the DBA’s SLA is the SAN admin’s job. You should make a secondary copy off the primary backup location but that’s independent of the primary backup target.

3. In an environment where you can suffer a catastrophic SAN failure and don’t have a dependable DR solution, chances are your alternate storage is at greater risk of failure, theft, etc…

4. Using alternate storage introduces additional risk, effort, complexity and is not scalable.

5. If you think you need to carve out physical disks for your backups in a SAN, then your SAN is probably not configured/sized correctly. In that case, get the SAN vendor to send someone with SAN and database expertise (send the generic SAN expert home). Physical isolation on SANs are implemented by design for fault tolerance. The rare occasions when done for performance is when you have extremely high loads (think top 10-15% of the world). If you’re in the top 5%, you’ll be moving off SANs.

6. Partitioning is a great solution but I won’t recommend it as a general purpose solution. It’s non trivial to design, implement and manage correctly. Failure on any one part can cause problems that go unnoticed for a long time. Experts like Jeff know the technology inside out but for the average DBA, it’s a disaster waiting to happen.

If you have IO contention, fix that. Introducing workarounds that require additional care and feed seem cool on the surface but in reality, you’re adding unnecessary complexity, admin overhead and risk. Get the storage admins onboard, educate them on your requirements then get them committed to your SLA.

LikeLike

Hi,

Thanks for your valuable comments, agree with you.

Dear Jeff, think this guy is correct on some of points (:

Virendra

LikeLike